Time willing, I would have liked to have a go with PFTrack, but from what I can see they don't have a student version, or I just can't find it! If I had a spare £2000 I would buy it, but no such luck.

So I thought I would read up on it instead.

PFTrack is brought to us by Pixel Farm, a company that manufactures and markets image processing technology for the professional film, TV and broadcasting markets.

PFTrack is tracking software and, after reading the overview on their website, it sounds like an impressive piece of kit. They describe it as the most comprehensive 3D tracking, match moving and scene preparation toolset that's available. So what can you do with it?

Node-based Flowgraph Architecture

‘The Tracking Tree controls the flow of data as nodes are connected to perform all of the various tasks in PFTrack 2012 such as image processing, feature and geometry-based tracking, camera solving, image modelling and file export. Nodes may be infinitely branched allowing multiple techniques to be used to achieve the most accurate result.’
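To picture the branching idea, here is a rough Python sketch of a node graph where one clip feeds two separate tracking branches. This is not PFTrack's actual API: the `Node` class and the node names are all made up for illustration.

```python
# Hypothetical sketch of a branching node graph; none of these names come from PFTrack.
class Node:
    def __init__(self, name, func, parent=None):
        self.name = name
        self.func = func
        self.parent = parent

    def evaluate(self, source):
        # Pull data from the upstream node first, then apply this node's own step.
        upstream = self.parent.evaluate(source) if self.parent else source
        return self.func(upstream)


# One clip node branches into two tracking techniques, each with its own solve.
clip = Node("clip input", lambda frames: list(frames))
auto_track = Node("auto track", lambda data: data + ["auto features"], parent=clip)
user_track = Node("user track", lambda data: data + ["user features"], parent=clip)
solve_a = Node("solve A", lambda data: data + ["camera solve"], parent=auto_track)
solve_b = Node("solve B", lambda data: data + ["camera solve"], parent=user_track)

result_a = solve_a.evaluate(["frame 1", "frame 2"])
result_b = solve_b.evaluate(["frame 1", "frame 2"])
```

Both branches start from the same clip, so the two results can be compared and the more accurate one kept.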

Geometry Tracking

‘Geometry Tracking can be used to track either the camera or a moving object, using a triangular mesh instead of tracking points, which avoids many of the typical pitfalls that plague conventional tracking such as glints, highlights and motion blur. In PFTrack 2012, Geometry Tracking has been enhanced so that it may be used to track a deformable object like a talking face. This can be achieved by creating one or more deformable tracking groups, assigning some of the triangles in the mesh to those groups, and specifying how the groups can transform relative to the rest of the mesh.’

Image Modelling

‘Image Modelling can be used to construct 3D polygonal models that match elements viewed by a tracked camera. A set of modelling primitives are provided that can be positioned in 3D space and edited to match the image data, or new models can be constructed by connecting 3D vertex positions to form a polygon mesh. Z-Depth can be used to estimate the distance of every pixel in an image from the camera frame, producing a grey-scale depth map image encoding z-depth, and a triangular mesh in 3D space. Texture UV maps can be created and edited for any object, and both static and animated textures can be mapped onto geometry for export.’
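The grey-scale depth map part can be sketched in a few lines of Python. This is only an assumption about how such an encoding might work, not PFTrack's method: the function name, the near/far range and the choice of "near is white" are all invented for the example.

```python
def depth_to_grey(depths, near, far):
    """Map camera distances to 0-255 grey values (assumed convention: near = white)."""
    if far <= near:
        raise ValueError("far must be greater than near")
    greys = []
    for z in depths:
        # Clamp to the chosen range so out-of-range pixels do not overflow.
        z = min(max(z, near), far)
        greys.append(round((far - z) / (far - near) * 255))
    return greys


row = depth_to_grey([1.0, 2.0, 5.0], near=1.0, far=5.0)
```

Each pixel's distance becomes a single brightness value, which is why a depth map can be saved as an ordinary grey-scale image.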

Stereoscopic Tracking

‘When tracking a stereoscopic camera in PFTrack 2012, auto and user features are tracked simultaneously on both the left and right eye images. When solving the camera, artists have full access to the data defining the rig including interocular distance, convergence, etc.’
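As a small worked example of how interocular distance and convergence relate, the sketch below works out the angle between the two camera axes for a simple toed-in rig. It assumes both cameras are rotated equally towards a point straight ahead of the rig; the function name is hypothetical and has nothing to do with PFTrack itself.

```python
import math


def convergence_angle_degrees(interocular, convergence_distance):
    """Angle between the two optical axes of a symmetric toed-in stereo rig."""
    if interocular <= 0 or convergence_distance <= 0:
        raise ValueError("distances must be positive")
    # Each camera sits half the interocular distance off-centre and turns inwards.
    half_angle = math.atan((interocular / 2) / convergence_distance)
    return math.degrees(2 * half_angle)


# Both values must use the same unit, e.g. metres.
angle = convergence_angle_degrees(interocular=0.065, convergence_distance=3.0)
```

The further away the convergence point, the smaller the angle, which is why distant scenes need very little toe-in.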

Image Processing

‘Already benefiting from the Enhance, Shutter Fix and rotoscoping capabilities of PFMatchit, PFTrack 2012 adds new Optical Flow tools to calculate dense optical flow fields describing the apparent motion of objects relative to the camera plane. It will also retime clip and motion data to increase or decrease the apparent frame-rate of the camera.’
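Retiming motion data can be sketched with plain linear interpolation between frames. To be clear, this swaps in a much simpler technique than optical flow and only handles a list of per-frame numbers (for example a camera's X position); the `retime` function and its `speed` parameter are assumptions for illustration.

```python
def retime(values, speed):
    """Resample per-frame values; speed 0.5 gives slow motion, 2.0 skips frames."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    if len(values) < 2:
        return list(values)
    last = len(values) - 1
    out = []
    i = 0
    while i * speed <= last:
        t = i * speed              # position in the original frame numbering
        lo = int(t)
        hi = min(lo + 1, last)
        frac = t - lo
        # Blend the two nearest original frames.
        out.append(values[lo] * (1 - frac) + values[hi] * frac)
        i += 1
    return out


slow = retime([0.0, 10.0, 20.0], speed=0.5)
fast = retime([0.0, 10.0, 20.0, 30.0, 40.0], speed=2.0)
```

Retiming the actual images is harder than retiming numbers, because the in-between frames have to be synthesised, which is where dense optical flow comes in.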

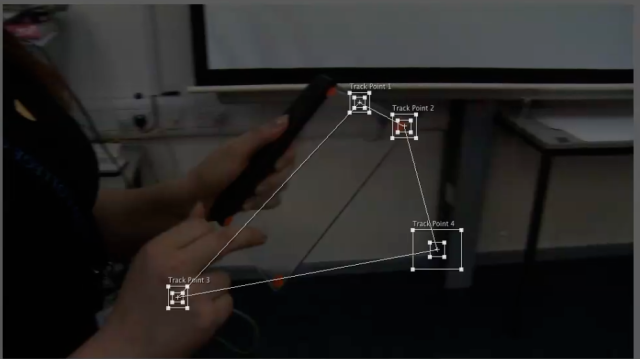

Mocap Solver

‘The Mocap Solver node can be used to calibrate the motion of individual tracking points viewed from two or more camera positions. This is often used to track the motion of an actor’s body or face, where tracking points have been identified using physical markers. In contrast to standard object tracking, the Mocap Solver node does not assume that the object is moving in a rigid fashion. The motion of each tracking point is completely independent and can therefore represent movement of non-rigid objects.’
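The idea of locating a marker from two camera positions can be sketched as triangulation: each camera gives a ray towards the marker, and the 3D point is taken as the midpoint of the shortest line between the two rays. This is a textbook approach and only a guess at the general principle, not the Mocap Solver's actual algorithm; all names are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate(origin1, dir1, origin2, dir2):
    """Midpoint of the closest points between two camera rays."""
    w = [p - q for p, q in zip(origin1, origin2)]
    a, b, c = dot(dir1, dir1), dot(dir1, dir2), dot(dir2, dir2)
    d, e = dot(dir1, w), dot(dir2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel, so the point cannot be triangulated")
    s = (b * e - c * d) / denom    # distance parameter along ray 1
    t = (a * e - b * d) / denom    # distance parameter along ray 2
    p1 = [o + s * k for o, k in zip(origin1, dir1)]
    p2 = [o + t * k for o, k in zip(origin2, dir2)]
    return [(u + v) / 2 for u, v in zip(p1, p2)]


# Two cameras two units apart, both looking at a marker at (1, 1, 0).
marker = triangulate([0, 0, 0], [1, 1, 0], [2, 0, 0], [-1, 1, 0])
```

Running this separately for every marker on every frame gives each point its own path, with no assumption that the points move together as a rigid object.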

Reading through the features of PFTrack, I realise how little I have scratched the surface of tracking software. A lot of this has gone over my head, but it is a program I would like to experiment with, and I want to look in more depth at match moving and motion tracking.

Since the hiccup with the Faceware plugin not being compatible with Mac, I have been checking software compatibility beforehand. PFTrack is compatible with both Mac and Windows.